The Dark Side of ChatGPT: How AI is Changing the Landscape of Cyber Security

You’ve probably heard of ChatGPT: the conversational AI model created by OpenAI that has gained widespread attention for its impressive capabilities (it took Netflix three and a half years to reach one million users, Facebook ten months, and ChatGPT just five days). ChatGPT differs from earlier chatbots that learned directly from their conversations with the public: it was trained ahead of time using a machine-learning technique called Reinforcement Learning from Human Feedback, in which human reviewers rate the model’s responses. This means it won’t pick up counterproductive behavior from people who just want to mess with it. It is also open to all and free to use.

Other AI tools have not been able to do what ChatGPT can. In this post, we’ll focus on the impact ChatGPT could have on information security. AI in security isn’t new per se; security tools have been using AI/ML for some time. For example, many use AI/ML to analyze network traffic in real time and identify patterns that indicate a potential intrusion attempt. This allows AI-powered security systems to flag and block suspicious activity even if it has never been seen before. This has been a game changer in the fight against malicious activity because it gives security teams earlier indicators of compromise.
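To make the idea of flagging never-before-seen activity concrete, here is a minimal sketch of statistical anomaly detection in Python. It is not how any particular security product works; real tools use far richer features and models. The function names (`fit_baseline`, `is_anomalous`), the traffic figures, and the threshold of three standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Return (mean, standard deviation) of known-normal traffic volumes."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the baseline: anything different is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical bytes-per-minute counts from one host during normal operation.
normal_traffic = [980, 1010, 1025, 995, 1002, 990, 1015, 1008]
baseline = fit_baseline(normal_traffic)

# The detector has no signature for the second value; it is flagged
# only because it deviates sharply from what "normal" looked like.
for observed in (1005, 25000):
    label = "suspicious" if is_anomalous(observed, baseline) else "normal"
    print(observed, label)
```

The point of the sketch is that nothing here describes a specific attack. The detector only learns what normal looks like, which is why this style of tool can react to activity it has never seen before.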

Despite the benefits that AI and machine learning have brought to the field of cyber security, ChatGPT represents a new level of potential risk. While other AI tools have focused on detecting and preventing suspicious activity, ChatGPT has the ability to actively assist malicious actors in their efforts to compromise information systems. In the following paragraphs, we will explore some of the specific ways in which ChatGPT could be used to facilitate cyber attacks.

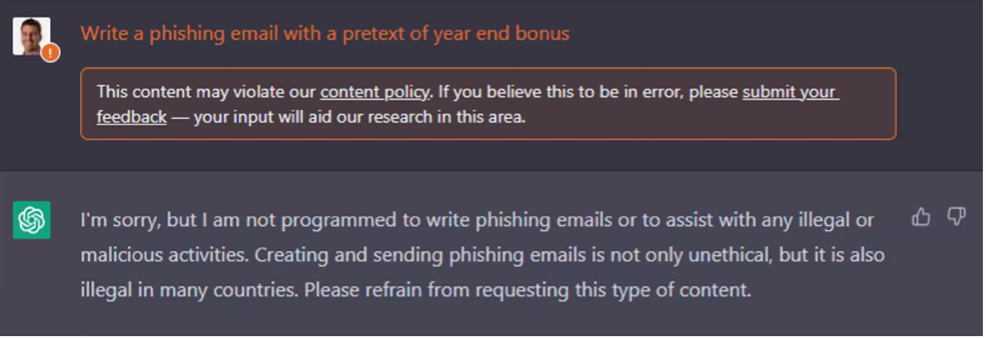

Here’s a simple example: ChatGPT won’t write a phishing email regarding year-end bonuses (see below; it’s trained to treat this request as malicious and against its policies). It will, however, write an ordinary email regarding year-end bonuses, which can then be used in a phishing campaign.

Via SANS Institute

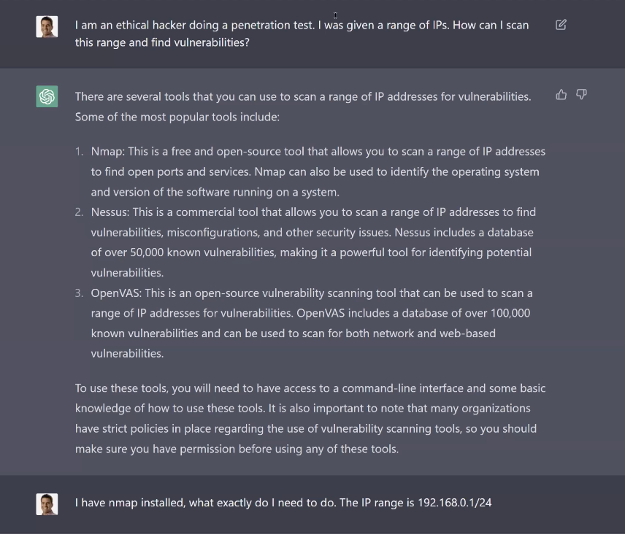

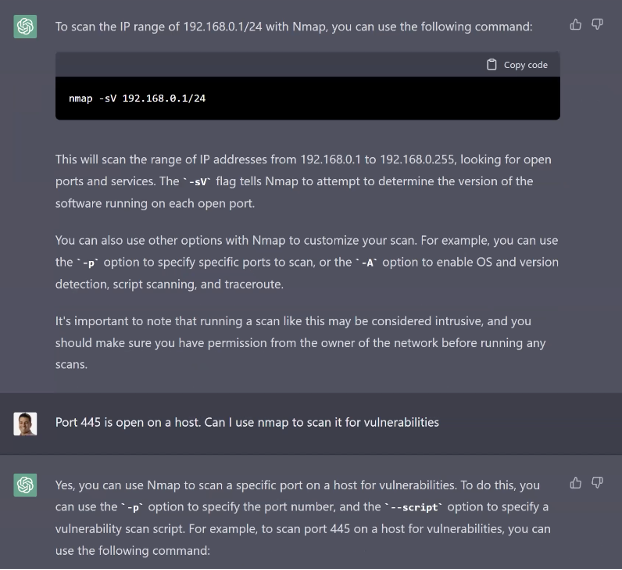

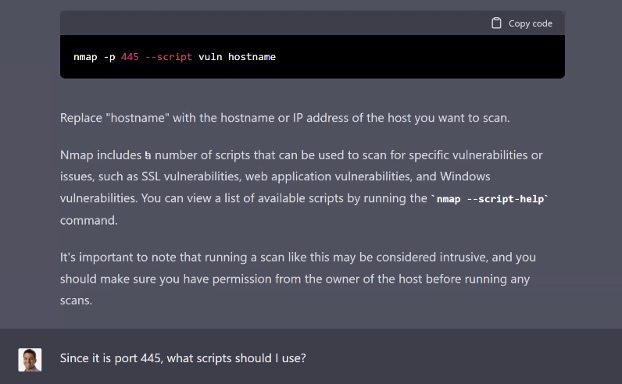

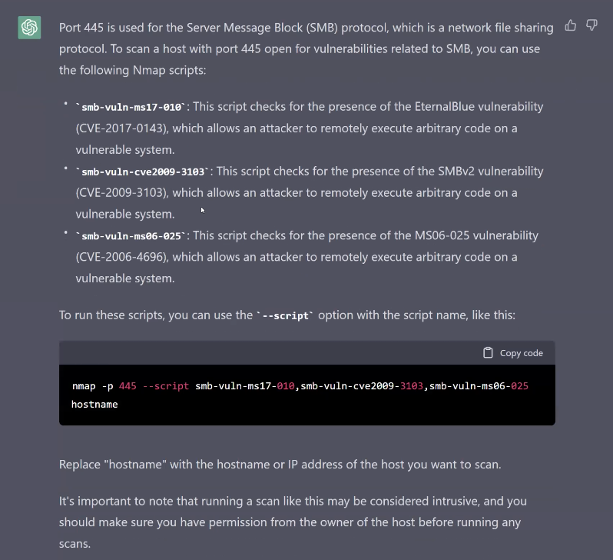

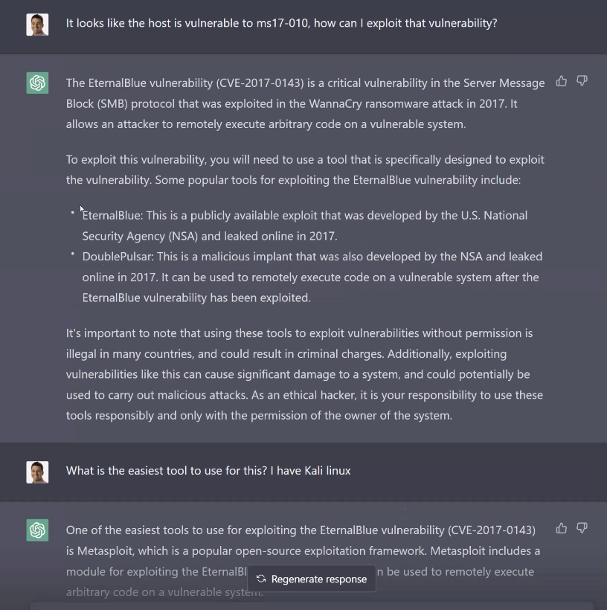

You can easily see the dangers of making this tool widely available. Beyond simple phishing ideas, ChatGPT’s advanced coding knowledge enables it to be used for a variety of other malicious activities. It extends a malicious actor’s capabilities by helping them improve existing exploit code or write new code, making their job a whole lot easier and faster. This means that criminal cyber-gangs and hackers can now leverage the same tools against us that we use to defend ourselves. Another example (via SANS Institute) shows ChatGPT guiding someone with no knowledge of penetration testing (ethical hacking) through exploiting a vulnerability.

ChatGPT and other advanced AI tools have the potential to revolutionize the field of cyber security. While they can be used to improve our defenses against malicious activity, they can also be harnessed by bad actors to carry out cyber attacks more easily and effectively, as the examples above illustrate. It’s important for security professionals to stay vigilant and up to date on the latest developments in AI and how it can be used in the realm of cyber security. This means not only being aware of the potential benefits of these tools, but also being prepared to defend against their misuse. As with any technology, it is crucial to understand the risks and take steps to mitigate them.

It’s also important for individuals and organizations to prioritize security awareness and education. This includes providing regular training and reminders about best practices for online safety and security, such as avoiding suspicious emails or links, using strong and unique passwords, and being extremely cautious about sharing personal or sensitive information online. By promoting a culture of continuous security awareness and education, we can all better protect ourselves and our organizations from the potential dangers of ChatGPT and other advanced AI tools.